|

Plastics. We think so much about the exponential growth of computer technology that we forget about other things growing exponentially.

It's like that line from the 1967 movie The Graduate: "I just want to say one word to you... just one word: Plastics."

We may be paying more attention to computers, but plastics haven't gone away. They've kept growing exponentially.

I'm going to quote a boatload of stuff from this PlastChem report. It's going to look like a lot, but in reality it's about 2 pages of an 87-page report (181 pages once you add in all the appendices). I'm quoting these parts rather than summarizing because I can't think of a way to compress them much further, and since I can't do a better job of it myself, I'm just going to present the choice quotes from the report. Here we go:

"The plastics economy is one of the largest worldwide. The global plastic market was valued at 593 billion USD in 2021. In the same year, the global trade value of plastic products was 1.2 trillion USD or 369 million metric tons. China, the USA, and European states are the major plastic-producing countries, with emerging economies experiencing a rapid expansion of local production capacities. The plastics economy is tightly embedded in the petrochemical sector, consuming 90% of its outputs to make plastics. This, in turn, creates strong linkages with the fossil industry, as 99% of plastic is derived from fossil carbon, production mostly relies on fossil energy, and the plastic and fossil industries are economically and infrastructurally integrated."

"Plastic production increases exponentially. The global production of plastics has doubled from 234 million tons in 2000 to 460 million tons in 2019 and its demand grows faster than cement and steel. On average, production grew by 8.5% per year from 1950-2019. Business-as-usual scenarios project that plastic production will triple from 2019 to 2060 with a growth rate of 2.5-4.6% per year, reaching 1230 million tons in 2060. By 2060, 40 billion tons of plastic will have been produced, with about 6 billion tons currently present on Earth."
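To put numbers on "exponential," here is a quick back-of-the-envelope check of the figures quoted above. It's a minimal Python sketch that assumes smooth constant-rate (compound) growth between the quoted endpoints, which is a simplification and not the report's own modeling method; the function names are just for illustration.

```python
import math


def implied_annual_growth(start, end, years):
    """Constant annual growth rate that turns `start` into `end` over `years` years.

    Assumes smooth compound growth between the two endpoints (a simplification).
    """
    return (end / start) ** (1 / years) - 1


def doubling_time(rate):
    """Years needed to double at a constant annual growth `rate` (0.085 means 8.5%)."""
    return math.log(2) / math.log(1 + rate)


# Production figures quoted from the report, in million tons.
rate_2000_2019 = implied_annual_growth(234, 460, 2019 - 2000)
rate_2019_2060 = implied_annual_growth(460, 1230, 2060 - 2019)

print(f"2000-2019 implied growth: {rate_2000_2019:.1%} per year")
print(f"2019-2060 projected growth: {rate_2019_2060:.1%} per year")
print(f"Doubling time at 8.5%/yr: {doubling_time(0.085):.1f} years")
```

Run as-is, it should print about 3.6% per year for 2000-2019, about 2.4% per year for the 2019-2060 projection (just under the low end of the quoted 2.5-4.6% range, so the report's scenario rates presumably rest on different assumptions than this simple endpoint calculation), and a doubling time of about 8.5 years at the long-run 8.5% rate.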

"The projected increase in plastic use is driven by economic growth and digitalization across regions and sectors. China is expected to remain the largest plastic user, but plastic demand is expected to grow stronger in fast-growing regions, such as Sub-Saharan Africa, India, and other Asian countries. Plastic use is projected to increase substantially across all sectors until 2060, and polymer types used in applications for packaging, construction and transportation make up the largest share of the projected growth. Importantly, the OECD predicts that petroleum-based, non-recycled plastics will continue to dominate the market in 2060. Single-use plastics, currently 35-40% of global production, are expected to grow despite regional phase-outs."

"Globally, seven commodity polymers dominate the plastics market. These include polypropylene (PP, 19% of global production), low-density polyethylene (LDPE, 14%), polyvinylchloride (PVC, 13%), high-density polyethylene (HDPE, 13%), polyethylene terephthalate (PET, 6%), polyurethane (PUR, 6%), and polystyrene (PS, 5%). Over 80% of Europe's total polymer demand is met by these (mostly in virgin form). Their usage varies by sector, with HDPE, LDPE, PET, and PP mainly being applied for packaging, and PS and PVC in construction."

"Plastic waste generation is expected to almost triple by 2060. In line with the growth in plastic use, the future plastic waste generation is projected to almost triple, reaching 1014 million tons in 2060. Waste generated from short-lived applications, including packaging, consumer products and textiles, and plastic used in construction are expected to dominate. The latter is relevant because long-lived applications will continue to produce 'locked-in' plastic waste well into the next century. Despite some improvements in waste management and recycling, the OECD projects that the amount of mismanaged plastic waste will continue to grow substantially and almost double to 153 million tons by 2060."

"The scale of plastic pollution is immense. The OECD estimates that 22 million tons of plastic were emitted to the environment in 2019 alone. While there are uncertainties in these estimates, they illustrate the substantial leakage of plastics into nature. Accordingly, approximately 140 million tons of plastic had accumulated in aquatic ecosystems by 2019. Emissions to terrestrial systems amount to 13 million tons per year (2019), but the accumulating stocks remain unquantified due to data gaps. While mismanaged waste contributes 82% to these plastic emissions, substantial leakages originate further upstream and throughout the plastic life cycle, such as from the release of micro- and nanoplastics. While the latter represent a relatively small share in terms of tonnage, the number of these particles outsizes that of larger plastic items emitted to nature."

"Plastic pollution is projected to triple by 2060. A business-as-usual scenario with some improvement in waste management and recycling predicts that the annual plastic emissions will double to 44 million tons in 2060. This is in line with other projections which estimate annual emissions of 53-90 million tons by 2030 and 34-55 million tons by 2040 to aquatic environments. According to the OECD, the accumulated stocks of plastics in nature would more than triple by 2060 to an estimated amount of 493 million tons, including the marine environment (145 million tons, 5-fold increase) and freshwater ecosystems (348 million tons, 3-fold increase). Since the impacts of plastic pollution are diverse and occur across the life cycle of plastics, the OECD concludes that 'plastic leakage is a major environmental problem and is getting worse over time. The urgency with which policymakers and other societal decision makers must act is high.'"

"Plastic monomers (e.g., ethylene, propylene, styrene) are mainly derived from fossil resources and then reacted (or, polymerized) to produce polymers (e.g., polyethylene, polypropylene, and polystyrene) that form the backbone of a plastic material. A mixture of starting substances (i.e., monomers, catalysts, and processing aids) is typically used in polymerization reactions. To produce plastic materials, other chemicals, such as stabilizers, are then added. This creates the so-called bulk polymer, usually in the form of pre-production pellets or powders. The bulk polymer is then processed into plastic products by compounding and forming steps, like extrusion and blow molding. Again, other chemicals are added to achieve the desired properties of plastic products, in particular additives. Importantly, such additives were crucial to create marketable materials in the initial development of plastics, and a considerable scientific effort was needed to stabilize early plastics. Throughout this process, processing aids are used to facilitate the production of plastics."

"At the dawn of the plastic age, scientists were unaware of the toxicological and environmental impacts of using additives in plastics. Their work to make plastic durable is essentially what has made plastics both highly useful, but also persistent and toxic."

"The growth in additives production mirrors that of plastics. The amount of additives in plastics can significantly vary, ranging from 0.05-70% of the plastic weight. For example, antioxidants in PE, PS, and ABS (acrylonitrile butadiene styrene) account for 0.5-3% of their weight. Light/UV stabilizers in PE, PP, and PVC constitute 0.1-10% by weight. Flame retardants can make up 2-28% of the weight, while plasticizers in PVC can be as high as 70% by weight. About 6 million tons of additives were produced in 2016 and the annual growth rate is 4% in the additives sector. Accordingly, additive production can be expected to increase by 130-280 thousand tons per year. By 2060, the joint production volume of a range of additive classes is projected to increase by a factor of five, closely mirroring the growth in overall plastic production."
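The additive figures can be sanity-checked with a bit of compounding arithmetic. This Python sketch assumes, purely for illustration, that the 4% growth rate holds constant from the 2016 baseline of about 6 million tons all the way to 2060; the report's factor-of-five projection comes from its own sources, not from this calculation.

```python
# Figures quoted from the report. Holding the 4% rate constant to 2060
# is an illustrative assumption, not the report's projection method.
base_2016_mt = 6.0   # million tons of additives produced in 2016
growth = 0.04        # annual growth rate of the additives sector

# First-year increase, converted from million tons to thousand tons.
first_year_increase_kt = base_2016_mt * growth * 1000

# Cumulative growth factor after compounding from 2016 to 2060 (44 years).
factor_by_2060 = (1 + growth) ** (2060 - 2016)

print(f"Increase in the first year: {first_year_increase_kt:.0f} thousand tons")
print(f"Growth factor 2016-2060: {factor_by_2060:.1f}x")
```

A 4% rise on 6 million tons is 240 thousand tons in the first year, inside the quoted 130-280 thousand ton range, and 44 years of compounding at 4% gives roughly a 5.6-fold increase, in the same ballpark as the projected factor of five.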

"Plastics also contain non-intentionally added substances. Non-intentionally added substances include impurities, degradation products, or compounds formed during the manufacturing process of plastics, which are not deliberately included in the material. Examples include degradation products of known additives (e.g., alkylphenols from antioxidants) and polymers (e.g., styrene oligomers derived from polystyrene). Unlike intentionally added substances (IAS), which are in principle known and therefore can be assessed and regulated, non-intentionally added substances are often complex and unpredictable. Thus, their identity remains mostly unknown and these compounds, though present in and released from all plastics, cannot easily be analyzed, assessed, and regulated. Despite these knowledge gaps, non-intentionally added substances probably represent a major fraction of plastic chemicals."

"The number and diversity of known plastic chemicals is immense. A recent analysis by the United Nations Environment Programme suggests that there are more than 13,000 known plastic chemicals, including polymers, starting substances, processing aids, additives, and non-intentionally added substances. The main reason for such chemical complexity of plastics is the highly fragmented nature of plastic value chains that market almost 100,000 plastic formulations and more than 30,000 additives, 16,000 pigments, and 8,000 monomers. While this represents the number of commercially available constituents of plastics, not necessarily the number of unique plastic chemicals, it highlights that the diversity of the plastics sector creates substantial complexity in terms of plastic chemicals."

"A full overview of which chemicals are present in and released from plastics is missing, mostly due to a lack of transparency and publicly available data. Nonetheless, the available scientific evidence demonstrates that most plastic chemicals that have been studied are indeed released from plastic materials and products via migration into liquids and solids (e.g., water, food, soils) and volatilization into air. Additional chemical emissions occur during feedstock extraction and plastic production as well as at the end-of-life (e.g., during incineration). This is problematic because upon release, these chemicals can contaminate natural and human environments which, in turn, results in an exposure of biota and humans."

"Most plastic chemicals can be released. The release of chemicals from plastics has been documented in a multitude of studies, especially in plastic food contact materials, that is, plastics used to store, process or package food. A systematic assessment of 470 scientific studies on plastic food packaging indicates that 1086 out of 1346 analyzed chemicals can migrate into food or food simulants under certain conditions. Accordingly, 81% of the investigated plastic chemicals are highly relevant for human exposure. Newer research with advanced methods to study previously unknown plastic chemicals illustrates that this probably represents the tip of the iceberg. Studies using so-called nontargeted or suspect screening approaches show that commonly more than 2000 chemicals leach from a single plastic product into water. While less information is available on non-food plastics, this highlights two important issues. Firstly, plastics can release a large number of chemicals which, secondly, then become relevant for the exposure of biota, including humans (termed 'exposure potential' in this report)."

"Many plastic chemicals are present in the environment. Upon release, plastic chemicals can enter the environment at every stage of the plastic life cycle. Accordingly, plastic chemicals are ubiquitous in the environment due to the global dispersal of plastic materials, products, waste, and debris. For instance, a recent meta-analysis suggests that more than 800 plastic chemicals have been analyzed in the environment. However, this evidence is fragmented, and a systematic assessment of which compounds have been detected in the environment is lacking. Yet, the evidence on well-studied plastic chemicals indicates that these are present in various environments and biota across the globe, including remote areas far away from known sources. Examples include many phthalates, organophosphate esters, bisphenols, novel brominated flame retardants, and benzotriazoles. Based on the existing evidence on well-researched compounds, it is prudent to assume that many more plastic chemicals are omnipresent in the natural and human environment, including in wildlife and humans."

"Humans are exposed to plastic chemicals across the entire life cycle of plastics. This ranges from the industrial emissions during production, affecting fence line communities, to the releases during use, affecting consumers, and at the end-of-life, including waste handling and incineration. These releases have resulted in extensive exposures of humans to plastic chemicals. For example, many phthalates, bisphenols, benzophenones, parabens, phenolic antioxidants as well as legacy brominated and organophosphate flame retardants have been detected in human blood, urine, and tissues in different global regions. Humans can be exposed to plastic chemicals directly, such as phthalates and other additives leaching from PVC blood bags used for transfusion or leaching into saliva in children mouthing plastic toys. Indirect exposure occurs through the ingestion of contaminated water and foodstuffs that have been in contact with plastics (e.g., processing, packaging). The inhalation and ingestion of plastic chemicals from air, dust and other particulate matter are other important routes of exposure. Importantly, research shows that women, children, and people in underprivileged communities often have higher levels of exposure."

"Non-human organisms are exposed to plastic chemicals. The scientific literature provides rich information on the exposure of wildlife to plastic chemicals, in particular on bisphenols and phthalates in terrestrial and aquatic ecosystems as well as persistent organic pollutants and antioxidants in marine environments. The United Nations Environment Programme highlights a global biomonitoring study which showed that seabirds from all major oceans contain significant levels of brominated flame retardants and UV stabilizers, indicating widespread contamination even in remote areas. Beyond seabirds, various other species are exposed to plastic chemicals according to the United Nations Environment Programme, such as mussels and fish containing high levels of hazardous chemicals like HBCDD (hexabromocyclododecane), bisphenol A, and PBDEs (polybrominated diphenyl ethers), suggesting plastics as a probable source. Land animals, including livestock, are exposed to chemicals from plastics, such as PBDEs in poultry and cattle, and phthalates in insects. Importantly, plastic chemicals can also accumulate in plants, including those for human consumption. This highlights a significant cross-environmental exposure that spans from marine to terrestrial ecosystems and food systems. However, while research on plastic chemicals in non-human biota is abundant, it remains fragmented and has not been systematically compiled and assessed thus far."

"Endocrine disrupting chemicals in plastics represent a major concern for human health. The plastic chemicals nonylphenol and bisphenol A were among the earliest identified compounds that interfere with the normal functioning of hormone systems. These findings marked the beginning of a broader recognition of the role of plastic chemicals in endocrine disruption and dozens have since been identified as endocrine disrupting chemicals. This includes several other bisphenols, phthalates (used as plasticizers), benzophenones (UV filters), and certain phenolic antioxidants, such as 2,4-di-tert-butylphenol. For example, strong scientific evidence links bisphenols to cardiovascular diseases, diabetes, and obesity. Accordingly, there is a strong interconnection between plastic chemicals and endocrine disruption."

"Additional groups of plastic chemicals emerge as a health concern. The Minderoo-Monaco Commission's recent report comprehensively assesses the health effects of plastics across the life cycle, including plastic chemicals. In addition to phthalates and bisphenols, the report highlights per- and polyfluoroalkyl substances (PFAS) widely utilized for their non-stick and water-repellent properties. PFAS are strongly associated with an increased risk of cancer, thyroid disease, and immune system effects, including reduced vaccine efficacy in children. Additional concerns pertain to their persistence and their tendency to bioaccumulate in humans. In addition, brominated and organophosphate flame retardants have been linked to neurodevelopmental effects and endocrine disruption, adversely affecting cognitive function and behavior in children, as well as thyroid and reproductive health. Several other plastic chemicals are known to cause harm to human health, for example because they are mutagens (e.g., formaldehyde) or carcinogens with other modes of action, like melamine."

"Plastic chemicals also impact human health when released from production and disposal sites. These more indirect effects include the contribution of plastic chemicals to water and air pollution across the life cycle. For instance, chlorofluorocarbons, previously used as blowing agents in plastic production, can deplete the stratospheric ozone layer and thereby indirectly affect human health. Other issues include the promotion of antimicrobial resistance due to the dispersion of biocides transferring from plastics in the environment and the release of dioxins and PCBs from the uncontrolled burning of plastic wastes. The latter are especially toxic and persistent, and accumulate in the food chain, leading to increased human exposure."

"The health impacts of well-researched plastic chemicals are established. Arguably, there is a large body of evidence that links certain groups of plastic chemicals to a range of adverse health effects. These include, but are not limited to, bisphenols, phthalates, PFAS, and brominated and organophosphate flame retardants. Research focusses particularly on their endocrine disrupting effects, including adverse impacts on reproduction, development, metabolism, and cognitive function. However, it should be noted that research into other groups of plastic chemicals and other types of health effects remains largely fragmented and has rarely been systematically assessed. Here, initiatives such as the Plastic Health Map can support a more strategic approach."

"Plastic chemicals exert a host of adverse impacts on wildlife. This includes both acute and chronic toxicity in individual organisms and populations, as well as indirect effects across food webs. Ecotoxicological effects of heavy metals, such as cadmium and lead, as well as endocrine disrupting chemicals used in plastics, such as bisphenols, phthalates, and brominated flame retardants, have received the most research attention to date. Oftentimes, these endocrine disrupting chemicals induce environmental impacts at very low concentrations."

Jumping to page 24 for "Key Findings" of Part II of the report, "What is known about plastic chemicals":

"There are at least 16,000 known plastic chemicals. The report identifies 16,325 compounds that are potentially used or unintentionally present in plastics."

"There is a global governance gap on plastic chemicals. 6% of all compounds are regulated internationally and there is no specific policy instrument for chemicals in plastics."

"Plastic chemicals are produced in volumes of over 9 billion tons per year. Almost 4000 compounds are high-production volume chemicals, each produced at more than 1000 tons per year."

"At least 6300 plastic chemicals have a high exposure potential. These compounds have evidence for their use or presence in plastics, including over 1500 compounds that are known to be released from plastic materials and products."

"Plastic chemicals are very diverse and serve multiple functions. In addition to well-known additives, such as plasticizers and antioxidants, many plastic chemicals often serve multiple functions, for instance, as colorants, processing aids, and fillers."

"Grouping of plastic chemicals based on their structures is feasible. Over 10,000 plastic chemicals are assigned to groups, including large groups of polymers, halogenated compounds, and organophosphates."

Jumping to page 28, they have a visualization of the number of different plastic chemicals by use category:

3674 Colorants

3028 Processing aids

1836 Fillers

1741 Intermediates

1687 Lubricants

1252 Biocides

959 Monomers

897 Crosslinkers

883 Plasticizers

862 Stabilizers

843 Odor agents

764 Light stabilizers

723 Catalysts

595 Antioxidants

478 Initiators

389 Flame retardants

215 Heat stabilizers

205 Antistatic agents

128 Viscosity modifiers

103 Blowing agents

83 Solvents

74 Other additives

56 NIASs (non-intentionally added substances)

47 Others

31 Impact modifiers

On page 30 they have a table that gives you numbers by chemical category (with many groups missing because apparently there is a "long tail" of categories with fewer than 10 members that they didn't bother to include):

802 Alkenes

443 Silanes, siloxanes, silicones

440 PFAS (per- and polyfluoroalkyl substances)

376 Alkanes

202 Carboxylic acids salts

140 PCBs (polychlorinated biphenyls)

124 Aldehydes simple

89 Azo dyes

75 Dioxins and furans

66 Alkylphenols

61 Ortho-phthalates

52 Aceto- and benzophenones

50 Phenolic antioxidants

45 PAHs (polycyclic aromatic hydrocarbons)

34 Bisphenols

29 Iso/terephthalates and trimellitates

28 Benzotriazoles

25 Ketones simple

24 Benzothiazole

22 Aromatic amines

20 Alkynes

20 Alkane ethers

18 Chlorinated paraffins combined

15 Aliphatic ketones

14 Aliphatic primary amides

11 Salicylate esters

10 Parabens

10 Aromatic ethers

Page 57 has a table of the number of hazardous chemicals by chemical category. Page 61 has a table of the number of hazardous chemicals by use category instead of chemical structure.

The last section covers policy recommendations.

The report proper is 87 pages, but the document is 181 pages. The rest is a series of appendices, which they call the "Annex", containing the glossary, abbreviations, and detailed findings for everything summarized in the rest of the report. |

|

|

The end of classical computer science is coming, and most of us are dinosaurs waiting for the meteor to hit, says Matt Welsh.

"I came of age in the 1980s, programming personal computers like the Commodore VIC-20 and Apple IIe at home. Going on to study computer science in college and ultimately getting a PhD at Berkeley, the bulk of my professional training was rooted in what I will call 'classical' CS: programming, algorithms, data structures, systems, programming languages."

"When I was in college in the early '90s, we were still in the depth of the AI Winter, and AI as a field was likewise dominated by classical algorithms. In Dan Huttenlocher's PhD-level computer vision course in 1995 or so, we never once discussed anything resembling deep learning or neural networks--it was all classical algorithms like Canny edge detection, optical flow, and Hausdorff distances."

"One thing that has not really changed is that computer science is taught as a discipline with data structures, algorithms, and programming at its core. I am going to be amazed if in 30 years, or even 10 years, we are still approaching CS in this way. Indeed, I think CS as a field is in for a pretty major upheaval that few of us are really prepared for."

"I believe that the conventional idea of 'writing a program' is headed for extinction, and indeed, for all but very specialized applications, most software, as we know it, will be replaced by AI systems that are trained rather than programmed."

"I'm not just talking about CoPilot replacing programmers. I'm talking about replacing the entire concept of writing programs with training models. In the future, CS students aren't going to need to learn such mundane skills as how to add a node to a binary tree or code in C++. That kind of education will be antiquated, like teaching engineering students how to use a slide rule."

"The shift in focus from programs to models should be obvious to anyone who has read any modern machine learning papers. These papers barely mention the code or systems underlying their innovations; the building blocks of AI systems are much higher-level abstractions like attention layers, tokenizers, and datasets."

This got me thinking: over the last 20 years, I've been predicting AI would advance to the point where it could automate jobs, and it's looking more and more like I was fundamentally right about that, and all the people who pooh-poohed the idea in conversations with me over the years were wrong. But while I was right about the fundamental idea (and right that there wouldn't be "one AI in a box" that anyone could pull the plug on if something went wrong, but rather a diffusion of the technology around the world, like every previous technology), I was wrong about exactly how it would play out.

First, I was wrong about the timescales. I thought it would be necessary to understand much more about how the brain works and to work algorithms derived from neuroscience into AI models, and given the rate of advancement in neuroscience, I predicted AI wouldn't reach its current state for a long time. While broad concepts like "neuron" and "attention" have been incorporated into AI, practically no specific algorithms have been ported from brains to AI systems.

Second, I was wrong about the order. I thought "routine" jobs would be automated first and "creative" jobs last. It turns out that what matters is "mental" vs. "physical". Computers can create visual art and music just by thinking very hard -- it's a purely "mental" activity, and computers can do all that thinking in bits and bytes.

This has led me to ponder: What occupations require the greatest level of manual dexterity?

Those should be the jobs safest from the AI revolution.

The first that came to mind for me -- when I was trying to think of jobs that require an extreme level of physical dexterity and pay very highly -- was "surgeon". So I now predict "surgeon" will be the last job to get automated. If you're giving career advice to a young person (or you are a young person), the advice to give is: become a surgeon.

Other occupations safe (for now) against automation, for the same reason, would include "physical therapist", "dentist", "dental hygienist", "dental technician", and "medical technician" (e.g. the people who customize prosthetics, orthodontic devices, and so on). Also "nurse", for nurses who routinely do physical procedures like drawing blood.

Continuing in the same vein but going outside the medical field (pun not intended but allowed to stand once recognized), I'd put "electronics technician". I don't think robots will be able to solder or manipulate very small components any time soon, at least not after the initial assembly, which does seem highly amenable to automation. But when electronic components fail, to the extent it falls on people to repair them rather than throw them out and replace them (which admittedly happens a lot), humans aren't going to be replaced any time soon.

Likewise "machinist" who works with small parts and tools.

"Engineer" ought to be ok -- as long as they're mechanical engineers or civil engineers. Software engineers are in the crosshairs. What matters is whether physical manipulation is part of the job.

"Construction worker" -- some jobs are high pay/high skill while others are low pay/low skill. It will be interesting to see what gets automated first and last in construction.

Other "trade" jobs like "plumber", "electrician", "welder" -- probably safe for a long time.

"Auto mechanic" -- probably one of the last jobs to be automated. The factory where the car is initially manufactured, a very controlled environment, may be full of robots, but it's hard to see robots extending into the auto mechanic's shop where cars go when they break down.

"Jeweler" ought to be a safe job for a long time. "Watchmaker" (or "watch repairer") too -- I'm still amazed people pay so much for old-fashioned mechanical watches. I guess the point is that they're pieces of jewelry, so these essentially count as "jeweler" jobs.

"Tailor" and "dressmaker" and other jobs centered around sewing.

"Hairstylist" / "barber" -- you probably won't be trusting a robot with scissors close to your head any time soon.

"Chef", "baker", and whatever the word is for "cake calligrapher". Years ago I thought we'd have automated kitchens at fast food restaurants by now, but they are nowhere in sight. And we're nowhere near automating the kitchens of the fancy restaurants with the top chefs.

Finally, let's revisit "artist". While "artist" is in the crosshairs of AI, some "artist" jobs are actually physical -- such as "sculptor" and "glassblower". These might be resistant to AI for a long time. Not sure how many sculptors and glassblowers the economy can support, though. Might be tough if all the other artists stampede into those occupations.

While "musician" is squarely in the crosshairs of AI, as we're seeing, that applies only to musicians who make recorded music -- going "live" may be a way to escape the automation. No robots with the manual dexterity to play physical guitars, violins, etc. appear to be on the horizon. Maybe they can play drums?

And for my last item: "magician" is another live entertainment career that requires a lot of manual dexterity and ought to be hard for a robot to replicate. Worth considering for those of you looking for a career in entertainment. Not sure how many magicians the economy can support, though. |

|

|

The Federal Trade Commission banned 'non-compete' agreements, to take effect in August. But it was only a 3-2 vote, and business groups are going to sue to maintain their ability to have employees sign non-compete contracts.

According to the article, business groups say non-compete agreements "protect trade secrets" and "promote competitiveness," while the FTC says banning non-competes would "increase worker earnings by up to $488 billion over the next decade and will lead to the creation of more than 8,500 new businesses each year."

I wonder whether that $488 billion can be realized over the next decade, with artificial intelligence (not mentioned in the article) advancing so fast. Creation of 8,500 new businesses? The trend for decades has been fewer businesses, with more people working for large companies than small ones. I suppose you could blame non-compete agreements for that, in which case the claim that eliminating them would create new businesses could be true. Also, when I hear "trade secrets," I think of advanced technology, but the article says Democrats claim non-compete agreements are used "even in lower-paying service industries such as fast food and retail." |

|

|

"In defense of AI art".

YouTuber "LiquidZulu" makes a gigantic video aimed at responding once and for all to all possible arguments against AI art.

His primary argument seems to me to be that AI art systems learn art in a manner analogous to human artists -- by learning from other artists' examples -- and do not plagiarize because they do not exactly copy any artist's work. On the contrary, AI art systems are actually good at combining styles in new ways. Therefore, he argues, AI art generators are just as valid "artists" as any human artists.

Artists have no right to government protection from having their jobs replaced by technology, he says, because nobody anywhere else in the economy has any right to government protection from having their jobs replaced by technology.

On the flip side, he thinks the ability of AI art generators to bring the ability to create art to the masses is a good thing that should be celebrated.

Below-average artists have no right to deprive people of this ability to generate the art they like just because those artists want to be paid.

Apparently he considers himself an anarcho-capitalist (something he has in common with... nobody here?) and has harsh words for people he considers neo-Luddites. He accuses artists who complain about AI art generators of being "elitist". |

|

|

For the first time, Alice Yalcin Efe is scared of AI as a music producer.

She's a professional music producer who has been number one on Beatport, has millions of streams on Spotify, and has played big festivals and clubs, "yet for the first time I am scared of AI as a music producer."

When you're homeless, you can listen to AI mix the beat on the beach.

After that, she ponders what this means for all the rest of us. Those of us who aren't professional music producers. Well, I guess we can all be music producers now.

"Music on demand becomes literal. You feel heartbroken, type it in. Type in the genres that you want. Type in the lyrics that you want. Type in the mood that you want and then AI spits out the perfect ballad for you to listen."

"I think it's both incredible and horrifying at the same time. I honestly don't know what comes next. Will this kill the artists' soul, or will it give us just more tools to make even greater things?" |

|

|

Musician Paul Folia freaks out over Suno and Udio (and other music AI). It reminds me of the freak-out among visual artists a year ago. It looks like AI is going to replace humans one occupation at a time, and people will freak out when their turn comes. He estimates that within a year, AI music will be good enough to wipe out stock music writing completely, producing tracks at a price no human can compete with ($0.02, in minutes).

He experiments with various music styles and artists' styles, and the part that impressed me most was, perhaps surprisingly, the baroque music. After noting that the training data was probably easy to get because it's public domain, he says, "This m-f-er learned some *serious* harmony. Not like three chords and some singing." |

|

|

"For those who don't yet know from their other social media: a week ago the cryptographer Yilei Chen posted a preprint, eprint.iacr.org/2024/555, claiming to give a polynomial-time quantum algorithm to solve lattice problems. For example, it claims to solve the GapSVP problem, which asks to approximate the length of the shortest nonzero vector in a given n-dimensional lattice, to within an approximation ratio of ~n^4.5. The best approximation ratio previously known to be achievable in classical or quantum polynomial time was exponential in n."

"If it's correct, this is an extremely big deal. It doesn't quite break the main lattice-based cryptosystems, but it would put those cryptosystems into a precarious position, vulnerable to a mere further polynomial improvement in the approximation factor. And, as we learned from the recent NIST competition, if the lattice-based and LWE-based systems were to fall, then we really don't have many great candidates left for post-quantum public-key cryptography! On top of that, a full quantum break of LWE (which, again, Chen is not claiming) would lay waste (in a world with scalable QCs, of course) to a large fraction of the beautiful sandcastles that classical and quantum cryptographers have built up over the last couple decades--everything from Fully Homomorphic Encryption schemes, to Mahadev's protocol for proving the output of any quantum computation to a classical skeptic."

Wow, that's quite a lot. Let's see if we can figure out what's going on here.

First of all, I hadn't heard of these "lattice" problems, but after some digging I found they've been of great interest to cryptographers preparing for quantum computers, because lattice problems are thought to resist attacks from quantum computers. Quantum computers have this magical ability to use the superposition of wavefunctions to test "all combinations at once" for a mathematical problem, which could be finding a key that decrypts an encrypted message. This magical ability is harder to tap into than it sounds. First, you need enough qubits (quantum bits -- the superposition bits that quantum computers use instead of the ordinary 0 or 1 bits of regular computers), and that's really hard because all the qubits have to be entangled, and maintaining a boatload of entangled qubits usually involves cooling the hardware to near absolute zero and other such difficult things. Second, you have to find an algorithm. Quantum computers are not straightforward to program, and can only run programs written specifically for quantum computers, using algorithms invented to solve a particular mathematical problem with quantum physics.

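What supposedly makes "lattice" problems harder to crack than RSA, Elliptic Curve Cryptography, and so on is this: RSA and Elliptic Curve Cryptography rest on factoring and discrete logarithms, which Shor's algorithm could solve efficiently on a big enough quantum computer, while no comparable quantum shortcut is known for lattice problems. With lattices, you can have any number of dimensions, and cranking up the dimensionality makes the best known attacks more expensive far faster than lengthening RSA or Elliptic Curve keys does. So lattice-based systems stand a much better chance of outpacing the advancement of quantum computers. Also, nobody had ever come up with an efficient algorithm, classical or quantum, for cracking lattice problems...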

...until now, maybe. That's what this post is about. Possibly this Yilei Chen cryptographer has found an algorithm. The specific problem he may have found a way to crack with a quantum algorithm is called GapSVP. SVP stands for "shortest vector problem," and clicking through on the link will take you to a Wikipedia page that explains the mathematics behind it. However, if you scroll down in the original post, you'll see discussion of a bug in Yilei Chen's algorithm. It is not known whether the algorithm can be fixed, or whether this means GapSVP and LWE remain unbroken.
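To get a feel for what "shortest vector" means, here's a minimal Python sketch of the easy case: a 2-dimensional lattice, where the classic Lagrange-Gauss reduction finds a shortest nonzero vector exactly. This is my own illustration, not anything from Chen's paper, and the example basis vectors are made up. The whole point of lattice cryptography is that nothing this simple works once the dimension is in the hundreds.

```python
from fractions import Fraction


def dot(u, v):
    """Dot product of two 2D integer vectors."""
    return u[0] * v[0] + u[1] * v[1]


def gauss_reduce(b1, b2):
    """Lagrange-Gauss reduction of a 2D lattice basis.

    Returns a new basis (b1, b2) for the same lattice in which b1 is a
    shortest nonzero lattice vector (the exact SVP answer in 2D).
    """
    if dot(b1, b1) > dot(b2, b2):
        b1, b2 = b2, b1
    while True:
        # Subtract the nearest-integer multiple of b1 from b2 (exact rational arithmetic).
        mu = round(Fraction(dot(b1, b2), dot(b1, b1)))
        b2 = (b2[0] - mu * b1[0], b2[1] - mu * b1[1])
        if dot(b2, b2) >= dot(b1, b1):
            return b1, b2
        b1, b2 = b2, b1  # b2 became shorter than b1: swap and keep going


# A made-up "bad" basis of long, skewed vectors for the same lattice.
short, other = gauss_reduce((5, 3), (8, 7))
print("shortest vector:", short, "squared length:", dot(short, short))
# prints: shortest vector: (-2, 1) squared length: 5
```

In 2D the reduction converges after a couple of swaps. GapSVP asks only for an *approximate* answer in n dimensions, and even that was believed to be out of reach for efficient algorithms at small approximation ratios.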

Speaking of LWE, the post also mentions LWE without giving a clue what "LWE" means. LWE stands for "Learning With Errors". In fact, if you clicked through on GapSVP to the Wikipedia page, you'll find a handy link to the Learning With Errors page at the bottom, in the "See also" section. With LWE, you have n-dimensional vectors of integers modulo some number q (remember, we make this hard by making the number of dimensions huge). You combine each one with a secret vector through a dot product (a linear function) and then perturb the result with a small error, perhaps drawn from a Gaussian distribution. To crack the system you have to recover the secret vector. Mathematicians have proven that solving LWE is at least as hard as solving certain lattice problems, including GapSVP, in the worst case, which is why LWE counts as a lattice problem.
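Here's a toy Python sketch of LWE as just described, to make the moving parts concrete. The parameters (n = 8, q = 97, error standard deviation 1.0) are made up and hopelessly insecure. Real schemes use dimensions in the hundreds and carefully specified error distributions. What it illustrates: if you know the secret, b minus a·s comes out small, and without the secret the b values just look like random numbers mod q.

```python
import random


def lwe_samples(n, q, m, sigma, seed=0):
    """Generate a toy LWE instance: a secret s and m samples (a, b) with
    b = <a, s> + e (mod q), where e is a small rounded-Gaussian error."""
    rng = random.Random(seed)
    s = [rng.randrange(q) for _ in range(n)]          # the secret vector
    samples = []
    for _ in range(m):
        a = [rng.randrange(q) for _ in range(n)]       # public, uniformly random vector
        e = round(rng.gauss(0, sigma))                 # small error term
        b = (sum(ai * si for ai, si in zip(a, s)) + e) % q
        samples.append((a, b))
    return s, samples


def centered(x, q):
    """Represent x mod q as an integer roughly in the range -q/2 .. q/2."""
    x %= q
    return x - q if x > q // 2 else x


def residuals(samples, guess, q):
    """b - <a, guess> (mod q), centered. Small values only if guess is the secret."""
    return [centered(b - sum(ai * gi for ai, gi in zip(a, guess)), q) for a, b in samples]


q = 97
secret, samples = lwe_samples(n=8, q=q, m=10, sigma=1.0)
print("with the secret:   ", residuals(samples, secret, q))
print("with a wrong guess:", residuals(samples, [0] * 8, q))
```

Without the errors, recovering s would just be solving a system of linear equations. The small noise is what turns it into a hard lattice problem.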

The mention of Mahadev's protocol, which you can find out all about by clicking through on that link, refers to a method that lets a classical computer verify the output of a quantum computation. A classical computer can't simply rerun the quantum computation to check the answer. Instead, the protocol has the quantum computer commit to the states of its qubits using cryptographic functions built on LWE, and the classical verifier then checks its responses to challenges it couldn't fake without breaking LWE. That's why a full quantum break of LWE would take Mahadev's protocol down with it. |

|

|

"AI is now dogfighting with fighter pilots in the air."

Well, that headline makes it sound like dogfighting exercises between human pilots and AI-piloted aircraft are happening now, but the article actually says, in a long-winded way, that this has been authorized and should be happening soon. It has been done in simulation only so far.

The AI-piloted aircraft is the X-62A, a modified two-seat F-16D that was tested last year.

"The machine learning approach relies on analyzing historical data to make informed decisions for both present and future situations, often discovering insights that are imperceptible to humans or challenging to express through conventional rule-based languages. Machine learning is extraordinarily powerful in environments and situations where conditions fluctuate dynamically making it difficult to establish clear and robust rules."

"Enabling a pilot-optional aircraft like the X-62A to dogfight against a real human opponent who is making unknowable independent decisions is exactly the 'environments and situations' being referred to here. Mock engagements like this can be very dangerous even for the most highly trained pilots given their unpredictability."

"Trust in the ACE algorithms is set to be put to a significant test later this year when Secretary of the Air Force Frank Kendall gets into the cockpit for a test flight."

"I'm going to take a ride in an autonomously flown F-16 later this year. There will be a pilot with me who will just be watching, as I will be, as the autonomous technology works, and hopefully, neither he nor I will be needed to fly the airplane."

ACE stands for "Air Combat Evolution". It's a Defense Advanced Research Projects Agency (DARPA) program.

The article has lots of links to more information on AI in fighter jets. |

|

|

"The tiny ultrabright laser that can melt steel".

Allegedly, in 2016 the Japanese government announced a plan for Society 5.0. If you're wondering what the first 4 were, they were 1: hunter-gatherers, 2: agrarian, 3: industrial, 4: the information age -- the end of which is fast approaching! That brings us to Society 5.0: on-demand physical products. (Also robot caretakers, but never mind that -- you need lasers for on-demand physical products!)

"The lasers of Society 5.0 will need to meet several criteria. They must be small enough to fit inside everyday devices. They must be low-cost so that the average metalworker or car buyer can afford them -- which means they must also be simple to manufacture and use energy efficiently. And because this dawning era will be about mass customization (rather than mass production), they must be highly controllable and adaptive."

"Semiconductor lasers would seem the perfect candidates, except for one fatal flaw: They are much too dim."

"Of course, other types of lasers can produce ultrabright beams. Carbon dioxide and fiber lasers, for instance, dominate the market for industrial applications. But compared to speck-size semiconductor lasers, they are enormous. A high-power CO2 laser can be as large as a refrigerator. They are also more expensive, less energy efficient, and harder to control."

"Over the past couple of decades, our team at Kyoto University has been developing a new type of semiconductor laser that blows through the brightness ceiling of its conventional cousins. We call it the photonic-crystal surface-emitting laser, or PCSEL (pronounced 'pick-cell'). Most recently, we fabricated a PCSEL that can be as bright as gas and fiber lasers -- bright enough to quickly slice through steel -- and proposed a design for one that is 10 to 100 times as bright. Such devices could revolutionize the manufacturing and automotive industries. If we, our collaborating companies, and research groups around the world can push PCSEL brightness further still, it would even open the door to exotic applications like inertial-confinement nuclear fusion and light propulsion for spaceflight."

That paragraph doesn't mention the size. 3 mm. As in, 3 millimeters. A lot smaller than a refrigerator.

The rest of the article describes -- pretty decently for lay people -- how these PCSEL lasers work.
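The core trick, as far as I can tell, is the textbook Bragg condition: light bouncing off a periodic structure reinforces itself only at wavelengths set by the spacing. Here's a small Python sketch that assumes the standard formulas, which the article doesn't spell out. For a 1D grating with period Λ and effective refractive index n_eff, reflections add up when m·λ = 2·n_eff·Λ. For a square-lattice PCSEL operating at the so-called Gamma point, the lattice spacing a works out to about λ/n_eff. The n_eff = 3.3 value is an assumed ballpark figure for semiconductor structures, not a number from the article.

```python
def bragg_wavelength_nm(period_nm, n_eff, order=1):
    """Wavelength reflected by a 1D grating: order * wavelength = 2 * n_eff * period."""
    return 2 * n_eff * period_nm / order


def pcsel_lattice_constant_nm(wavelength_nm, n_eff):
    """Square-lattice PCSEL at the Gamma point: wavelength = n_eff * lattice constant."""
    return wavelength_nm / n_eff


N_EFF = 3.3  # assumed effective refractive index (illustrative, not from the article)

# A 1D grating with 145 nm grooves reflects (first order) at about 957 nm.
print(round(bragg_wavelength_nm(145, N_EFF), 1))
# A PCSEL designed for 940 nm would need its air holes spaced roughly this far apart, in nm.
print(round(pcsel_lattice_constant_nm(940, N_EFF), 1))
```

The second-order 1D Bragg condition (order=2) gives the same relation, λ = n_eff·a, which fits the idea that the 2D lattice bends light both backward and sideways to build up one standing wave.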

"At certain wavelengths determined by the groove spacing, light refracts through and partially reflects off each interface. The overlapping reflections combine to form a standing wave that does not travel through the crystal."

"In a square lattice such as that used in PCSELs, air holes bend light backward and sideways. The combination of multiple such diffractions creates a 2D standing wave. In a PCSEL, only this wave is amplified in the active layer, creating a laser beam of a single wavelength." |

|

|

Sheets of gold that are one atom thick have been synthesized.

They're calling it "goldene", to make you think of "graphene," "the iconic atom-thin material made of carbon that was discovered in 2004."

"Since then, scientists have identified hundreds more of these 2D materials. But it has been particularly difficult to produce 2D sheets of metals, because their atoms have always tended to cluster together to make nanoparticles instead."

"Researchers have previously reported single-atom-thick layers of tin and lead stuck to various substances, and they have produced gold sheets sandwiched between other materials. But 'we submit that goldene is the first free-standing 2D metal, to the best of our knowledge', says materials scientist Lars Hultman at Linköping University in Sweden."

"The Linköping researchers started with a material containing atomic monolayers of silicon sandwiched between titanium carbide. When they added gold on top of this sandwich, it diffused into the structure and exchanged places with the silicon to create a trapped atom-thick layer of gold. They then etched away the titanium carbide to release free-standing goldene sheets that were up to 100 nanometres wide, and roughly 400 times as thin as the thinnest commercial gold leaf."

"That etching process used a solution of alkaline potassium ferricyanide known as Murakami's reagent. 'What's so fascinating is that it's a 100-year-old recipe used by Japanese smiths to decorate ironwork,' Hultman says. The researchers also added surfactant molecules -- compounds that formed a protective barrier between goldene and the surrounding liquid -- to stop the sheets from sticking together."

"Light can generate waves in the sea of electrons at a gold nanoparticle's surface, which can channel and concentrate that energy. This strong response to light has been harnessed in gold photocatalysts to split water to produce hydrogen, for instance. Goldene could open up opportunities in areas such as this, Hultman says, but its properties need to be investigated in more detail first." |

|

|

Injection molding vs 3D printing. An injection molding machine with a 2-cavity tool competes with 4 Form 4 3D printers in a race to make 1,000 parts, which are simple plastic latches. If it sounds a bit unfair that 1 injection molding machine goes up against 4 3D printers, they show that the 3D printers cost less and take up less floor space. They also need less lead time: none at all, versus weeks for the injection molding machine.

See below for a keynote address explaining more about how the Form 4 3D printers work. |

|

|

Pulsed charging enhances the service life of lithium-ion batteries.

"Lithium-ion batteries are powerful, and they are used everywhere, from electric vehicles to electronic devices. However, their capacity gradually decreases over the course of hundreds of charging cycles. The best commercial lithium-ion batteries with electrodes made of so-called NMC532 and graphite have a service life of up to eight years. Batteries are usually charged with a constant current flow. But is this really the most favourable method? A new study by Prof Philipp Adelhelm's group at Helmholtz-Zentrum Berlin and Humboldt-University Berlin answers this question clearly with no."

I skipped the chemical formula for NMC532, but it's in the article. Basically it's a lithium nickel manganese cobalt oxide, with nickel, manganese, and cobalt in a 5:3:2 ratio (that's where the "532" comes from).

"Part of the battery tests were carried out at Aalborg University. The batteries were either charged conventionally with constant current or with a new charging protocol with pulsed current. Post-mortem analyses revealed clear differences after several charging cycles: In the constant current samples, the solid electrolyte interface at the anode was significantly thicker, which impaired the capacity. The team also found more cracks in the structure of the NMC532 and graphite electrodes, which also contributed to the loss of capacity. In contrast, pulsed current-charging led to a thinner solid electrolyte interface and fewer structural changes in the electrode materials."

"Helmholtz-Zentrum Berlin researcher Dr Yaolin Xu then led the investigation into the lithium-ion cells at Humboldt University and BESSY II with operando Raman spectroscopy and dilatometry as well as X-ray absorption spectroscopy to analyse what happens during charging with different protocols. Supplementary experiments were carried out at the PETRA III synchrotron. 'The pulsed current charging promotes the homogeneous distribution of the lithium ions in the graphite and thus reduces the mechanical stress and cracking of the graphite particles. This improves the structural stability of the graphite anode,' he concludes. The pulsed charging also suppresses the structural changes of NMC532 cathode materials with less Ni-O bond length variation."

BESSY stands for "Berliner Elektronenspeicherring-Gesellschaft für Synchrotronstrahlung". In English, "Berlin Electron Storage Ring Society for Synchrotron Radiation". Which would be BESRSSR. Never mind. Yes, "Electron Storage Ring" got all smashed together into "Elektronenspeicherring" in the German. "Society" seems like an odd part of the name in English. "Gesellschaft" can be translated "society" but could also be "company". Maybe "organization" would be a better word. Synchrotron radiation is a type of radiation emitted in particle accelerators when charged particles are pushed into going in circles or curved trajectories instead of being allowed to go in straight lines like they want to, and apparently requires "relativistic" speeds, which I take to mean, charged particles near the speed of light.
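To put a number on "near the speed of light", here's a quick Python calculation. It assumes the electrons in the storage ring have an energy of about 1.7 GeV (BESSY II's nominal operating energy; the article itself doesn't give a figure). The Lorentz factor γ is the total energy divided by the electron's rest energy (about 0.511 MeV), and the speed as a fraction of c is sqrt(1 - 1/γ²).

```python
import math

ELECTRON_REST_ENERGY_MEV = 0.51099895  # electron rest energy, m_e * c^2, in MeV


def lorentz_factor(total_energy_mev, rest_energy_mev=ELECTRON_REST_ENERGY_MEV):
    """gamma = E / (m c^2)"""
    return total_energy_mev / rest_energy_mev


def speed_fraction_of_c(gamma):
    """v / c = sqrt(1 - 1/gamma^2)"""
    return math.sqrt(1.0 - 1.0 / gamma**2)


gamma = lorentz_factor(1700.0)  # assumed 1.7 GeV beam energy
print(f"gamma = {gamma:.0f}, v/c = {speed_fraction_of_c(gamma):.10f}")
```

That works out to γ of a bit over 3,300, and a speed within about 5 parts in 100 million of the speed of light. So "relativistic" is putting it mildly.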

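Raman spectroscopy works like this, as best I understand it: you shine light of a single fixed wavelength on the sample. Nearly all of it scatters off at the same wavelength, but a tiny fraction scatters with its energy nudged down or up by exactly the energy of the molecules' vibrations. Those shifts act as a fingerprint of the chemical bonds present. "Operando" means the measurements were taken while the battery was actually operating, that is, charging and discharging. "Dilatometry" is the measurement of how much a material expands or contracts; here it presumably tracks how much the electrodes swell and shrink as lithium ions move in and out.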
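The "nudging down or up" is usually expressed as a Raman shift in wavenumbers (cm⁻¹): the difference between 1/λ of the laser and 1/λ of the scattered light. Here's a small Python sketch that assumes a 532 nm laser (a common choice; the article doesn't say which one the team used) and graphite's well-known G band near 1580 cm⁻¹.

```python
NM_PER_CM = 1e7  # 1 cm = 10^7 nm, so 1e7 / wavelength_nm gives wavenumbers in cm^-1


def raman_shift_cm1(laser_nm, scattered_nm):
    """Raman shift: positive for Stokes (photon lost energy), negative for anti-Stokes."""
    return NM_PER_CM / laser_nm - NM_PER_CM / scattered_nm


def scattered_wavelength_nm(laser_nm, shift_cm1):
    """Wavelength at which light scattered with a given Raman shift shows up."""
    return NM_PER_CM / (NM_PER_CM / laser_nm - shift_cm1)


laser = 532.0    # assumed laser wavelength, nm
g_band = 1580.0  # approximate graphite G-band shift, cm^-1
print(scattered_wavelength_nm(laser, g_band))   # Stokes: lost energy, longer wavelength
print(scattered_wavelength_nm(laser, -g_band))  # anti-Stokes: gained energy, shorter wavelength
```

The two lines come out at roughly 581 nm and 491 nm. Tracking how peaks like this shift and change during charging is how you can watch what lithium is doing inside the graphite.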

PETRA III is a particle accelerator in Hamburg run by DESY, the German government's national organization for fundamental physics research. DESY stands for "Deutsches Elektronen-Synchrotron" and PETRA stands for "Positron-Electron Tandem Ring Accelerator" (apparently doesn't have both German and English versions?).

All in all, a pretty sophisticated amount of analysis of battery recharging.
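What does "pulsed current" charging look like, concretely? The article doesn't give the pulse frequency, duty cycle, or amplitude the team used, so here's a purely hypothetical Python sketch of the general idea. It builds a constant-current profile and a square-wave pulsed profile tuned to the same average current, so both push the same total charge into the battery over the same time. Every number and function name here is made up for illustration.

```python
def constant_profile(i_avg, n_ticks):
    """Constant-current charging: the same current at every time step."""
    return [i_avg] * n_ticks


def pulsed_profile(i_avg, duty, ticks_per_period, n_periods):
    """Square-wave pulsed charging with the same time-averaged current as i_avg.

    The current is on for `duty` of each period and off for the rest, so the
    on-current has to be i_avg / duty to deliver the same average.
    """
    on_ticks = round(duty * ticks_per_period)
    if not 0 < on_ticks <= ticks_per_period:
        raise ValueError("duty cycle too small or too large for this resolution")
    i_peak = i_avg * ticks_per_period / on_ticks
    one_period = [i_peak] * on_ticks + [0.0] * (ticks_per_period - on_ticks)
    return one_period * n_periods


def charge_mAh(profile_mA, tick_seconds):
    """Total charge delivered, in mAh, by a current profile sampled every tick_seconds."""
    return sum(profile_mA) * tick_seconds / 3600


# Hypothetical: 1000 mA average, 10 ms time steps, 1 s periods at 50% duty, for one hour.
steady = constant_profile(1000.0, 360_000)
pulsed = pulsed_profile(1000.0, duty=0.5, ticks_per_period=100, n_periods=3600)
print(charge_mAh(steady, 0.01), charge_mAh(pulsed, 0.01), max(pulsed))
```

Both profiles deliver the same charge. The pulsed one just does it in bursts at twice the current, with rest periods in between. The pulse frequency, set here by ticks_per_period, is exactly the knob the researchers say still needs optimizing.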

Still, the optimal pulse frequency is not known. |

|

|

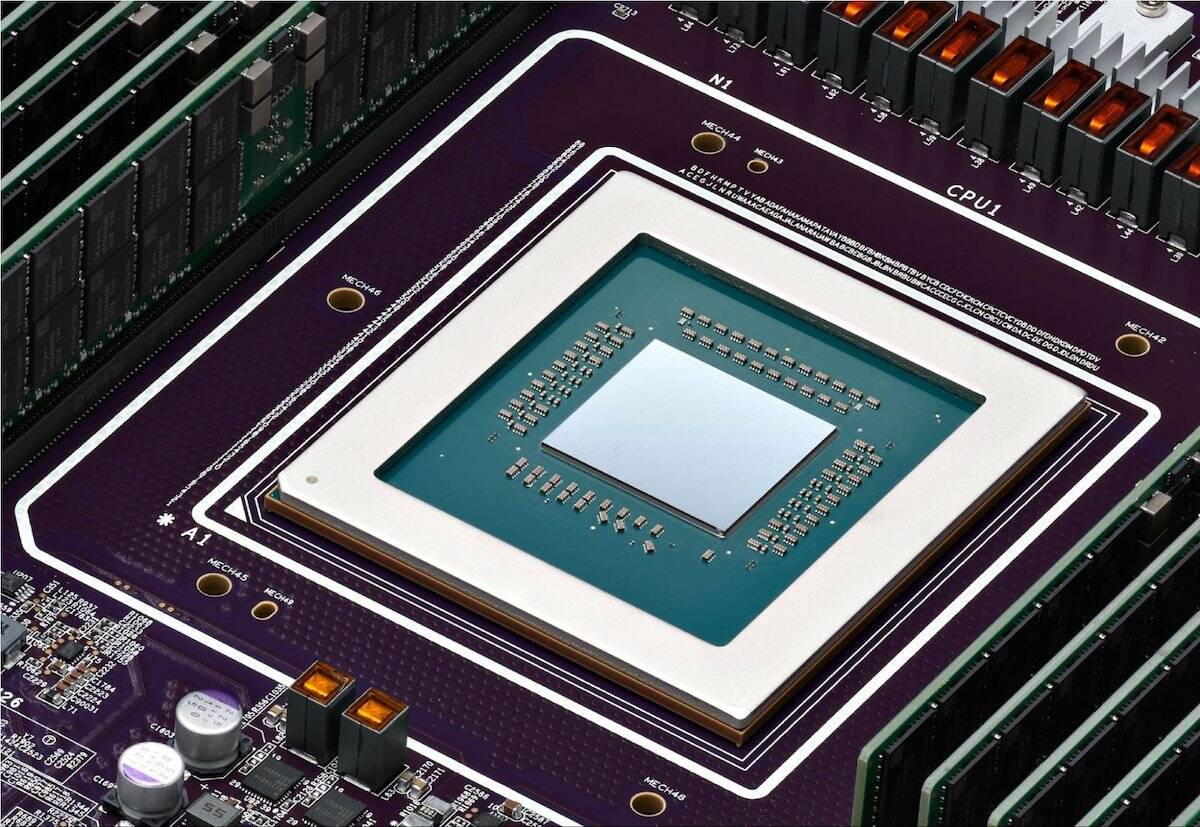

"Google joins the custom server CPU crowd with Arm-based Axion chips."

"Google is the latest US cloud provider to roll its own CPUs. In fact, it's rather late to the party. Amazon's Graviton processors, which made their debut at re:Invent in 2018, are now on their fourth generation. Meanwhile, Microsoft revealed its own Arm chip, dubbed the Cobalt 100, last fall."

"The search giant has a history of building custom silicon going back to 2015. However, up to this point most of its focus has been on developing faster and more capable Tensor Processing Units (TPUs) to accelerate its internal machine learning workloads." |

|

|

"Airchat is a new social media app that encourages users to 'just talk.'" "Visually, Airchat should feel pretty familiar and intuitive, with the ability to follow other users, scroll through a feed of posts, then reply to, like, and share those posts. The difference is that the posts and replies are audio recordings, which the app then transcribes."

What do y'all think? You want an audio social networking app? |

|

|

"One of the most common concerns about AI is the risk that it takes a meaningful portion of jobs that humans currently do, leading to major economic dislocation. Often these headlines come out of economic studies that look at various job functions and estimate the impact that AI could have on these roles, and then extrapolates the resulting labor impact. What these reports generally get wrong is the analysis is done in a vacuum, explicitly ignoring the decisions that companies actually make when presented with productivity gains introduced by a new technology -- especially given the competitive nature of most industries."

Says Aaron Levie, CEO of Box, a company that makes large-enterprise cloud file sharing and collaboration software.

"Imagine you're a software company that can afford to employ 10 engineers based on your current revenue. By default, those 10 engineers produce a certain amount of output of product that you then sell to customers. If you're like almost any company on the planet, the list of things your customers want from your product far exceeds your ability to deliver those features any time soon with those 10 engineers. But the challenge, again, is that you can only afford those 10 engineers at today's revenue level. So, you decide to implement AI, and the absolute best case scenario happens: each engineer becomes magically 50% more productive. Overnight, you now have the equivalent of 15 engineers working in your company, for the previous cost of 10."

"Finally, you can now build the next set of things on your product roadmap that your customers have been asking for."

Read the comments, too. There is some interesting discussion, which is uncommon for Twitter. It was apparently made possible by the fact that not just Aaron Levie but some other people forked over money to Twitter to be able to post things longer than some arbitrary and super-tiny character limit. |

|

|

"Evaluate LLMs in real time with Street Fighter III"

"A new kind of benchmark? Street Fighter III assesses the ability of LLMs to understand their environment and take actions based on a specific context. As opposed to RL models, which blindly take actions based on the reward function, LLMs are fully aware of the context and act accordingly."

"Each player is controlled by an LLM. We send to the LLM a text description of the screen. The LLM decide on the next moves its character will make. The next moves depends on its previous moves, the moves of its opponents, its power and health bars."

"Fast: It is a real time game, fast decisions are key"

"Smart: A good fighter thinks 50 moves ahead"

"Out of the box thinking: Outsmart your opponent with unexpected moves"

"Adaptable: Learn from your mistakes and adapt your strategy"

"Resilient: Keep your RPS high for an entire game"

Um... Alrighty then... |

|